The Megapixels camera application has long been the most performant camera application on the original PinePhone. I did not get Megapixels to that point alone: several other contributors have helped slowly improve the performance and features of this application. Benjamin in particular has moved it forward massively with the threaded processing code and the GPU-accelerated preview.

All this code has made Megapixels very fast on the PinePhone but also has made it quite a lot harder to port the application to other hardware. The code is very much overfitted for the PinePhone hardware.

Finding a better design

To address the elephant in the room: yes, libcamera exists and promises to abstract this all away. I just disagree with the design tradeoffs taken with libcamera and I think that any competition would only improve the ecosystem. It can't be that libcamera got this exactly right on the first try, right?

Instead of following libcamera's approach of writing abstraction code in C++ for every platform, I have decided to use the method that libalsa uses for audio abstraction in userspace.

Alsa UCM config files are selected by soundcard name and contain a set of instructions to bring the audio pipeline into the correct state for your current use case. All the hardware-specific things are not described in code but in plain text configuration files. I think this scales way better since it massively lowers the skill floor needed to actually mess with the system to get hardware working.

The first iteration of Megapixels has already somewhat done this. There's a config file that is picked based on the hardware model that describes the names of the device nodes in /dev so those paths don't have to be hardcoded and it describes the resolution and mode to configure. It also describes a few details about the optical path to later produce correct EXIF info for the pictures.
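To make the lookup concrete, here is a minimal Python sketch of picking a config file by hardware model. It assumes the model comes from the NUL-separated device-tree compatible list and that config files are named `<compatible>.ini`. The function name `pick_config` and the sample values are made up for illustration and are not taken from the Megapixels source.

```python
from typing import Iterable, Optional


def pick_config(compatible: bytes, available: Iterable[str]) -> Optional[str]:
    """Return the first config file name that matches a compatible string."""
    names = set(available)
    # The device tree lists compatible strings NUL-separated, most specific first.
    models = [m for m in compatible.decode("ascii", "replace").split("\0") if m]
    for model in models:
        candidate = model + ".ini"  # assumed naming scheme
        if candidate in names:
            return candidate
    return None


# Hypothetical values, for illustration only.
compatible = b"pine64,pinephone-1.2\0pine64,pinephone\0allwinner,sun50i-a64\0"
available = ["pine64,pinephone.ini", "purism,librem5.ini"]
print(pick_config(compatible, available))
```

In this sketch the most specific compatible string wins, so a board revision could get its own file while other revisions fall back to the generic one. The actual PinePhone config from the first iteration of Megapixels follows.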

[device]

make=PINE64

model=PinePhone

[rear]

driver=ov5640

media-driver=sun6i-csi

capture-width=2592

capture-height=1944

capture-rate=15

capture-fmt=BGGR8

preview-width=1280

preview-height=720

preview-rate=30

preview-fmt=BGGR8

rotate=270

colormatrix=1.384,-0.3203,-0.0124,-0.2728,1.049,0.1556,-0.0506,0.2577,0.8050

forwardmatrix=0.7331,0.1294,0.1018,0.3039,0.6698,0.0263,0.0002,0.0556,0.7693

blacklevel=3

whitelevel=255

focallength=3.33

cropfactor=10.81

fnumber=3.0

iso-min=100

iso-max=64000

flash-path=/sys/class/leds/white:flash

[front]

...
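As a rough illustration of how the optical fields can feed EXIF data, the Python sketch below parses an excerpt of the file above with the standard `configparser` module and derives the 35mm-equivalent focal length as focal length times crop factor. The helper name `load_optics` and the returned dictionary layout are assumptions for this example, not how Megapixels itself stores these values.

```python
import configparser

# Excerpt of the PinePhone config shown above.
SAMPLE = """
[device]
make=PINE64
model=PinePhone

[rear]
focallength=3.33
cropfactor=10.81
fnumber=3.0
colormatrix=1.384,-0.3203,-0.0124,-0.2728,1.049,0.1556,-0.0506,0.2577,0.8050
"""


def load_optics(text, section="rear"):
    """Read the optical-path fields of one camera section (hypothetical helper)."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    cam = parser[section]
    focal = cam.getfloat("focallength")
    crop = cam.getfloat("cropfactor")
    # The nine comma-separated values form a 3x3 matrix, stored row by row.
    values = [float(v) for v in cam["colormatrix"].split(",")]
    matrix = [values[i:i + 3] for i in range(0, 9, 3)]
    return {
        "focal_length_mm": focal,
        # The crop factor scales the real focal length to its 35mm equivalent.
        "focal_length_35mm": focal * crop,
        "f_number": cam.getfloat("fnumber"),
        "color_matrix": matrix,
    }


optics = load_optics(SAMPLE)
print(round(optics["focal_length_35mm"], 1))  # 3.33 * 10.81 = 35.9973, prints 36.0
```

For the rear camera that gives roughly a 36mm-equivalent lens, which is the kind of value the optical fields in the config file exist to supply.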

This works great for the PinePhone but it has a significant drawback. Most mobile cameras require an elaborate graph of media nodes to be configured before video works. The PinePhone is the exception in that its media graph only has an input and an output node, so Megapixels just hardcodes that part of the hardware setup. This makes the config file practically useless for all other phones, and it is also one of the reasons why different devices have different forks to make Megapixels work.

So a config file that only works for a single configuration is pretty useless. Instead of making this an .ini file I've switched the design over to libconfig so I don't have to create a whole new parser and it allows for nested configuration blocks. The config file I have been using on the PinePhone with the new codebase is this:

Version = 1;

Make: "PINE64";

Model: "PinePhone";

Rear: {

SensorDriver: "ov5640";

BridgeDriver: "sun6i-csi";

FlashPath: "/sys/class/leds/white:flash";

IsoMin: 100;

IsoMax: 64000;

Modes: (

{

Width: 2592;

Height: 1944;

Rate: 15;

Format: "BGGR8";

Rotate: 270;

FocalLength: 3.33;

FNumber: 3.0;

Pipeline: (

{Type: "Link", From: "ov5640", FromPad: 0, To: "sun6i-csi", ToPad: 0},

{Type: "Mode", Entity: "ov5640", Width: 2592, Height: 1944, Format: "BGGR8"},

);

},

{

Width: 1280;

Height: 720;

Rate: 30;

Format: "BGGR8";

Rotate: 270;

FocalLength: 3.33;

FNumber: 3.0;

Pipeline: (

{Type: "Link", From: "ov5640", FromPad: 0, To: "sun6i-csi", ToPad: 0},

{Type: "Mode", Entity: "ov5640"},

);

}

);

};

Front: {

SensorDriver: "gc2145";

BridgeDriver: "sun6i-csi";

FlashDisplay: true;

Modes: (

{

Width: 1280;

Height: 960;

Rate: 60;

Format: "BGGR8";

Rotate: 90;

Mirror: true;

Pipeline: (

{Type: "Link", From: "gc2145", FromPad: 0, To: "sun6i-csi", ToPad: 0},

{Type: "Mode", Entity: "gc2145"},

);

}

);

};

Instead of having a hardcoded preview mode and main mode for every sensor, it's now possible to define many different resolution configs. This config recreates the 2 existing modes; Megapixels now automatically picks the faster mode for the preview and uses the higher-resolution mode for the actual picture.
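A selection rule along these lines can be sketched in a few lines of Python. The exact criteria are an assumption here (highest framerate for the preview, most pixels for the capture), and the `Mode` class and function names are invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Mode:
    width: int
    height: int
    rate: int


def pick_preview(modes):
    """Assumed rule: highest framerate, and the smaller frame on a tie."""
    return max(modes, key=lambda m: (m.rate, -(m.width * m.height)))


def pick_capture(modes):
    """Assumed rule: most pixels, and the higher framerate on a tie."""
    return max(modes, key=lambda m: (m.width * m.height, m.rate))


# The two rear camera modes from the PinePhone config above.
rear = [Mode(2592, 1944, 15), Mode(1280, 720, 30)]
print(pick_preview(rear))
print(pick_capture(rear))
```

With the two rear modes above, this picks 1280x720 at 30 fps for the preview and 2592x1944 at 15 fps for the picture, matching the old hardcoded split.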

Every mode now also has a Pipeline block that describes the media graph as a series of commands. Every line translates to one ioctl called on the right device node, just like Alsa UCM files describe the audio setup as a series of amixer commands. Megapixels no longer has the implicit PinePhone pipeline, so here the config describes the one link it has to make between the sensor node and the csi node, and it tells Megapixels to set the correct mode on the sensor node.

This simple PinePhone example does not really show off most of the config options, so let's look at a more complicated example:
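The one-command-one-ioctl idea can be sketched as a small planner in Python that only names the call each command would turn into, without touching any device. Only the `Mode` mapping to `VIDIOC_SUBDEV_S_FMT` is stated in this post. The mappings for `Link` and `Crop`, the default pad of 0, and the function name `plan` are assumptions made for this example.

```python
IOCTL_FOR_TYPE = {
    "Link": "MEDIA_IOC_SETUP_LINK",       # assumption: issued on the media device
    "Mode": "VIDIOC_SUBDEV_S_FMT",        # named in this post
    "Crop": "VIDIOC_SUBDEV_S_SELECTION",  # assumption
}


def plan(pipeline):
    """Turn pipeline commands into (ioctl name, target, detail) tuples."""
    steps = []
    for cmd in pipeline:
        ioctl = IOCTL_FOR_TYPE[cmd["Type"]]
        if cmd["Type"] == "Link":
            target = "media device"
            detail = "{}:{} -> {}:{}".format(
                cmd["From"], cmd["FromPad"], cmd["To"], cmd["ToPad"])
        else:
            # Mode and Crop act on the subdevice found by entity name.
            target = cmd["Entity"]
            detail = "pad {}".format(cmd.get("Pad", 0))  # pad 0 assumed as default
        steps.append((ioctl, target, detail))
    return steps


# The PinePhone rear camera pipeline from the config above.
pinephone = [
    {"Type": "Link", "From": "ov5640", "FromPad": 0, "To": "sun6i-csi", "ToPad": 0},
    {"Type": "Mode", "Entity": "ov5640"},
]
for step in plan(pinephone):
    print(step)
```

Keeping the table of command types this small is the point of the design: supporting a new phone means writing more config lines, not more code.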

Pipeline: (

{Type: "Link", From: "imx258", FromPad: 0, To: "rkisp1_csi", ToPad: 0},

{Type: "Mode", Entity: "imx258", Format: "RGGB10P", Width: 1048, Height: 780},

{Type: "Mode", Entity: "rkisp1_csi"},

{Type: "Mode", Entity: "rkisp1_isp"},

{Type: "Mode", Entity: "rkisp1_isp", Pad: 2, Format: "RGGB8"},

{Type: "Crop", Entity: "rkisp1_isp"},

{Type: "Crop", Entity: "rkisp1_isp", Pad: 2},

{Type: "Mode", Entity: "rkisp1_resizer_mainpath"},

{Type: "Mode", Entity: "rkisp1_resizer_mainpath", Pad: 1},

{Type: "Crop", Entity: "rkisp1_resizer_mainpath", Width: 1048, Height: 768},

);

This is the preview pipeline for the PinePhone Pro. Most of the Links are already hardcoded by the kernel itself so here it only creates the link from the rear camera sensor to the csi and all the other commands are for configuring the various entities in the graph.

The Mode commands are basically doing the VIDIOC_SUBDEV_S_FMT ioctl on the device node found by the entity name. To make configuring modes on the pipeline not extremely verbose it implicitly takes the resolution, pixelformat and framerate from the main information set by the configuration block itself. Since several entities can convert the frames into another format or size it automatically cascades the new mode to the lines below it.

In the example above the 5th command sets the format to RGGB8 which means that the mode commands below it for rkisp1_resizer_mainpath also will use this mode but the rkisp1_csi mode command above it will still be operating in RGGB10P mode.
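The cascading behaviour can be sketched in Python as a single pass that carries the current mode from one command to the next. This is an illustration of the rule described above, not the libmegapixels implementation. The starting values in `base` are hypothetical because the surrounding mode block for this pipeline is not shown, and the sketch skips `Link` and `Crop` commands entirely.

```python
def cascade(pipeline, base):
    """Resolve the effective mode for every Mode command, top to bottom."""
    current = dict(base)
    resolved = []
    for cmd in pipeline:
        if cmd["Type"] != "Mode":
            continue  # Link and Crop are ignored in this sketch
        # An explicit value overrides the inherited one and sticks for later lines.
        for key in ("Width", "Height", "Format"):
            if key in cmd:
                current[key] = cmd[key]
        resolved.append((cmd["Entity"], cmd.get("Pad", 0), dict(current)))
    return resolved


# Hypothetical stand-in for the values set by the mode block itself.
base = {"Width": 1048, "Height": 780, "Format": "RGGB10P"}

# The Mode commands of the PinePhone Pro preview pipeline shown above.
pipeline = [
    {"Type": "Mode", "Entity": "imx258", "Format": "RGGB10P", "Width": 1048, "Height": 780},
    {"Type": "Mode", "Entity": "rkisp1_csi"},
    {"Type": "Mode", "Entity": "rkisp1_isp"},
    {"Type": "Mode", "Entity": "rkisp1_isp", "Pad": 2, "Format": "RGGB8"},
    {"Type": "Mode", "Entity": "rkisp1_resizer_mainpath"},
    {"Type": "Mode", "Entity": "rkisp1_resizer_mainpath", "Pad": 1},
]

for entity, pad, mode in cascade(pipeline, base):
    print(entity, pad, mode["Format"])
```

Running this prints RGGB10P for imx258, rkisp1_csi and the first rkisp1_isp pad, then RGGB8 for rkisp1_isp pad 2 and both rkisp1_resizer_mainpath pads.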

Splitting off device management code

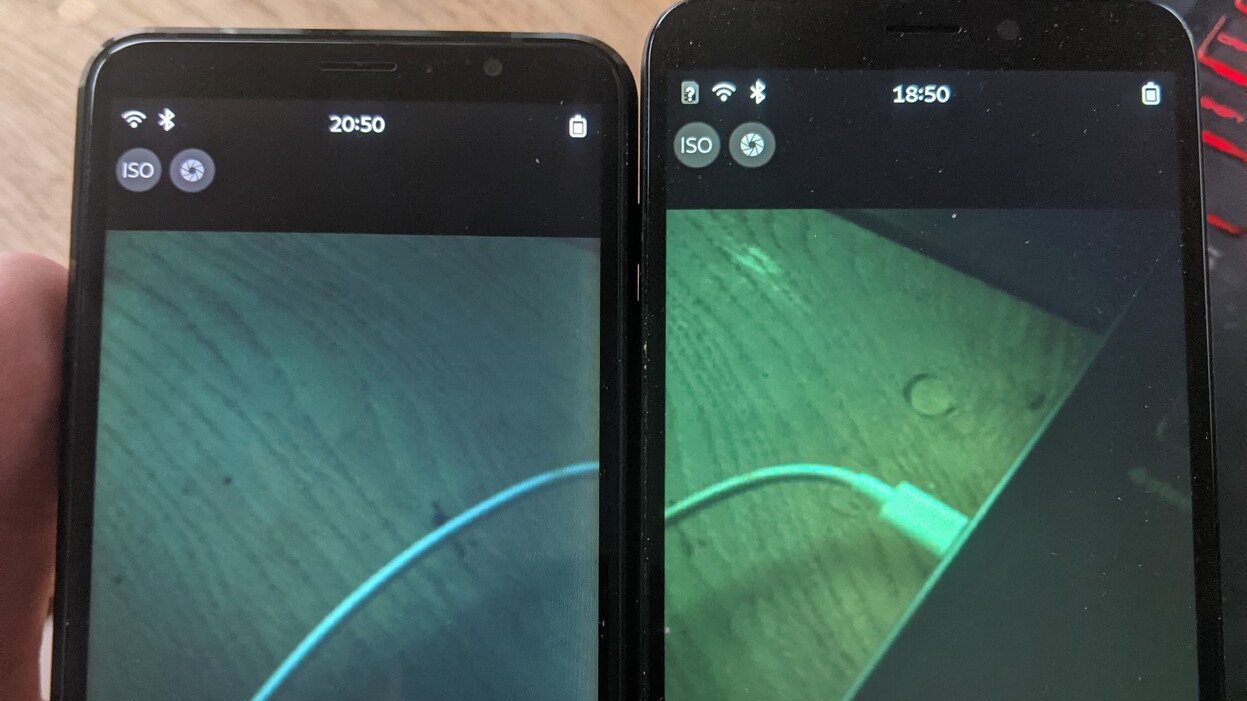

Testing changes in Megapixels is pretty hard. To develop the Megapixels code I'm building it on the phone and launching it over SSH with a bunch of environment variables set so the GTK window shows up on the phone and I get realtime logs on my computer. If there's anything that's going on after the immediate setup code it is quite hard to debug because it's in one of the three threads that process the image data.

I implemented the new pipeline configuration in a new, empty project that builds a shared library and a few command line utilities that help test a few specific things. This codebase is libmegapixels, and with it I have split off all hardware access from Megapixels itself, making both codebases a lot easier to understand.

It has been a lot easier to debug complex camera pipelines using the commandline utilities and only working on the library code. It should also make it a lot easier to make Megapixels-like applications that are not GTK4 to make it integrate more with other environments. One of the test applications for libmegapixels is getframe which is now all you need to get a raw frame from the sensor.

Since this codebase is now split into multiple parts I have put it into a separate gitlab organisation at https://gitlab.com/megapixels-org which hopefully keeps this a bit organized.

This is also the codebase used for https://fosstodon.org/@martijnbraam/110775163438234897 which shows off libmegapixels and megapixels 2.0 running on the Librem 5.

Burnout

So now for the worse part of this blog post. No, you can't use this stuff yet :(

I've been working on this code for months, and now I've not been working on this code for months. I have completely burned out on all of this.

The libmegapixels code is in pretty good state but the Megapixels rewrite is still a large mess:

- Saving pictures doesn't really work and I intended to split that off to create libdng

- The QR code support is not hooked up at all at the moment

- Several pixelformats don't work correctly in the GPU decoder and I can't find out why

- Librem 5 and PinePhone Pro really need auto-exposure, auto-focus and auto-whitebalance to produce anything remotely looking like a picture. I have ported the auto-exposure from Millipixels which works reasonably well for this but got stuck on AWB and have not attempted Autofocus yet.

The mountain of work that's left to do to make this a superset of the functionality of Megapixels 1.x and the expectations surrounding it have made this pretty hard to work on. On the original Megapixels releases nothing mattered because any application that could show a single frame of the camera was already a 100% improvement over the current state.

Another issue is that whatever I do or figure out, it will always instantly be put down with "Why are you not using libcamera" and "libcamera probably fixes this".

Something people really need to understand is that an application not using libcamera does not mean other software on the system can't support libcamera. If Firefox can use libcamera to do videocalls that's great, that's not the use case Megapixels is going for anyway.

What also doesn't help is receiving bug reports for the PinePhone Pro while Megapixels does not support the PinePhone Pro. There's a patchset added on top to make it launch on the PinePhone Pro but there's a reason this patchset is not in Megapixels. The product of the Megapixels source with the ppp.patch added on top probably shouldn't've been distributed as Megapixels...

What also doesn't help is that if Megapixels 2.0 were finished and released it would also create a whole new wave of criticism and comparisons to libcamera. I would have to support Megapixels for the people complaining that it's not enough... You could've not had a camera application at all...

It also doesn't help that the libcamera developers are also the v4l2 subsystem maintainers in the kernel. During development of libmegapixels I tried sending a simple patch for an issue I had noticed, one that would massively improve the ease of debugging PinePhone Pro cameras. I sent this 3 character patch upstream to the v4l2 mailing lists and it got a Reviewed-by in a few days.

Then after 2 whole months of radio silence it got rejected by the lead developer of libcamera on debatable grounds. Now this is only a very small patch so I'm merely disappointed. If I had put more work into the kernel side improving some sensor drivers I might have been mad, but at this point I'm just not feeling like contributing to the camera ecosystem anymore.

Edit: I've been convinced to actually try to do this full-time and push the codebase forward enough to make it usable. This is continued at https://blog.brixit.nl/adding-hardware-to-libmegapixels/