In the last post I only showed off the libmegapixels config format and made some claims about configurability without demonstrating it, so I thought that it might be a good idea to actually demonstrate and document it.

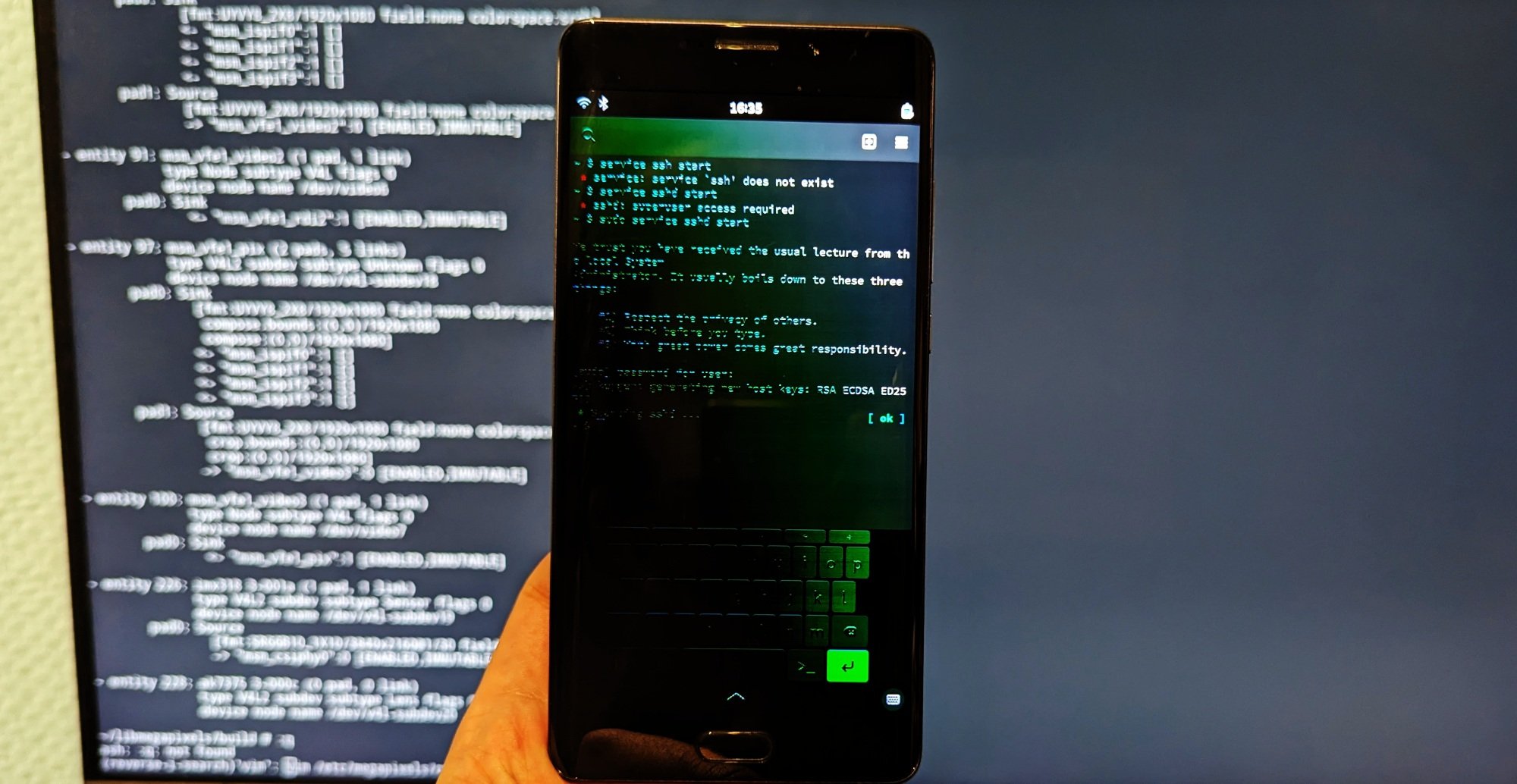

As an example device I will use my Xiaomi Mi Note 2 with a broken display, shown above. It is also known in postmarketOS under the codename xiaomi-scorpio. I picked this device as a demo since I have already used this hardware in Megapixels 1.x, so I know the kernel side of it is functional. I have not run any libmegapixels code on this device before writing this blog post, so I'm writing it as I go along debugging it. Hopefully this device does not require any ioctl that has not been needed by the existing supported devices.

What makes it possible to get camera output from this phone is two things:

- The camera subsystem in this device is supported pretty well in the kernel. In this case it's a Qualcomm device, which has a somewhat universal driver for this

- The sensor in this phone has a proper driver

The existing devices that I used to develop libmegapixels are based around the Rockchip, NXP and Allwinner platforms so this will be an interesting test if my theory works.

The config file name

Just like Megapixels 1.x the config file is based around the "compatible" name of the device. This is defined in the device tree passed to Linux by the bootloader. Since this is a nice mainline Linux device this info can be found in the kernel source: https://github.com/torvalds/linux/blob/b85ea95d086471afb4ad062012a4d73cd328fa86/arch/arm64/boot/dts/qcom/msm8996pro-xiaomi-scorpio.dts#L17

compatible = "xiaomi,scorpio", "qcom,msm8996pro", "qcom,msm8996";

This device tree specifies three names for this device, ranked from more specific to less specific. xiaomi,scorpio is the exact hardware name, qcom,msm8996pro is the variant of the SoC and the qcom,msm8996 name is the inexact name of the SoC. Since this configuration defines both the SoC pipeline and the configuration for the specific sensor module, the only sane option here is xiaomi,scorpio since that describes that exact hardware configuration. Other msm8996 devices might be using a completely different sensor.

The most specific option is not always the best option, in the case of the PinePhone for example the compatible is:

"pine64,pinephone-1.1", "pine64,pinephone", "allwinner,sun50i-a64";

In this hardware the camera system for the 1.0, 1.1 and 1.2 revisions is identical so the config file just uses the pine64,pinephone name.

Knowing this the config file name will be xiaomi,scorpio.conf and can be placed in three locations. /usr/share/megapixels/config, /etc/megapixels/config and just the plain filename in your current working directory.
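To make the naming scheme a bit more concrete, here is a minimal Python sketch that turns the compatible strings into candidate config paths. It is not how libmegapixels itself does the lookup: the search order of the three directories and the idea of trying every compatible name are assumptions made for illustration, and `parse_compatible` and `candidate_paths` are hypothetical helper names. The only hard fact it relies on is that the kernel exposes the compatible property as NUL-terminated strings in /proc/device-tree/compatible.

```python
from pathlib import Path

# The three locations named above. The order is an assumption for this sketch.
SEARCH_DIRS = [
    Path("."),
    Path("/etc/megapixels/config"),
    Path("/usr/share/megapixels/config"),
]


def parse_compatible(raw: bytes) -> list[str]:
    """Split a device tree 'compatible' property into its NUL-terminated names."""
    return [part.decode("ascii") for part in raw.split(b"\0") if part]


def candidate_paths(names: list[str]) -> list[Path]:
    """Build every <dir>/<name>.conf combination, most specific name first."""
    return [d / f"{name}.conf" for name in names for d in SEARCH_DIRS]


# On the phone itself this would be:
#   raw = Path("/proc/device-tree/compatible").read_bytes()
raw = b"xiaomi,scorpio\0qcom,msm8996pro\0qcom,msm8996\0"
names = parse_compatible(raw)
paths = candidate_paths(names)

for path in paths:
    marker = "found" if path.is_file() else "missing"
    print(f"{marker:8}{path}")
```

Running this on the device is a quick way to check that the file you just wrote actually has the name and location you think it has.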

Now that we know what the config path is, the hard part starts: figuring out what to put in this config file.

The media pipeline

The next step is figuring out the media pipeline for this device. If the kernel has support for the hardware in the device it should create one or more /dev/media files. In the case of the Scorpio there's only a single one for the camera pipeline but there might be additional ones for stuff like hardware accelerated video encoding or decoding.

You can get the contents of the media pipelines with the media-ctl utility from v4l-utils. Use media-ctl -p to print the pipeline and you can use the -d option to choose another file than /dev/media0 if needed. For the Scorpio the pipeline contents are:

Media controller API version 6.1.14

Media device information

------------------------

driver qcom-camss

model Qualcomm Camera Subsystem

serial

bus info platform:a34000.camss

hw revision 0x0

driver version 6.1.14

Device topology

- entity 1: msm_csiphy0 (2 pads, 5 links)

type V4L2 subdev subtype Unknown flags 0

device node name /dev/v4l-subdev0

pad0: Sink

[fmt:UYVY8_2X8/1920x1080 field:none colorspace:srgb]

<- "imx318 3-001a":0 [ENABLED,IMMUTABLE]

pad1: Source

[fmt:UYVY8_2X8/1920x1080 field:none colorspace:srgb]

-> "msm_csid0":0 []

-> "msm_csid1":0 []

-> "msm_csid2":0 []

-> "msm_csid3":0 []

[ Removed A LOT of entities here for brevity ]

- entity 226: imx318 3-001a (1 pad, 1 link)

type V4L2 subdev subtype Sensor flags 0

device node name /dev/v4l-subdev19

pad0: Source

[fmt:SRGGB10_1X10/5488x4112@1/30 field:none colorspace:raw xfer:none]

-> "msm_csiphy0":0 [ENABLED,IMMUTABLE]

- entity 228: ak7375 3-000c (0 pad, 0 link)

type V4L2 subdev subtype Lens flags 0

device node name /dev/v4l-subdev20

The header shows that this is a media device for the qcom-camss system, which handles cameras on Qualcomm devices. There is also a node for the imx318 sensor which further confirms that this is the right media pipeline.
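If there are several /dev/media files, this check can be scripted. The sketch below pulls the driver name and the sensor entities out of saved `media-ctl -p` text. The `SAMPLE` string is a trimmed copy of the output above with the indentation media-ctl normally prints, and `summarize` is a hypothetical helper written for this post, not something from v4l-utils or libmegapixels.

```python
import re

# Trimmed copy of the media-ctl -p output above.
SAMPLE = """\
Media device information
------------------------
driver          qcom-camss
model           Qualcomm Camera Subsystem
driver version  6.1.14

Device topology
- entity 1: msm_csiphy0 (2 pads, 5 links)
            type V4L2 subdev subtype Unknown flags 0
- entity 226: imx318 3-001a (1 pad, 1 link)
              type V4L2 subdev subtype Sensor flags 0
- entity 228: ak7375 3-000c (0 pad, 0 link)
              type V4L2 subdev subtype Lens flags 0
"""

ENTITY = re.compile(r"- entity \d+: (.+) \(\d+ pads?, \d+ links?\)")


def summarize(text):
    """Return (driver, [(entity name, subtype), ...]) from media-ctl -p text."""
    driver = None
    entities = []
    for line in text.splitlines():
        line = line.strip()
        match = re.match(r"driver\s+(\S+)", line)
        if match and driver is None:
            # Only the first "driver" line counts, not "driver version".
            driver = match.group(1)
            continue
        match = ENTITY.match(line)
        if match:
            entities.append([match.group(1), None])
            continue
        match = re.search(r"subtype (\w+)", line)
        if match and entities:
            entities[-1][1] = match.group(1)
    return driver, [tuple(entity) for entity in entities]


driver, entities = summarize(SAMPLE)
sensors = [name for name, subtype in entities if subtype == "Sensor"]
print(driver, sensors)
```

For the Scorpio this reports the qcom-camss driver and a single sensor entity, which are the two things to look for when picking the right media device.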

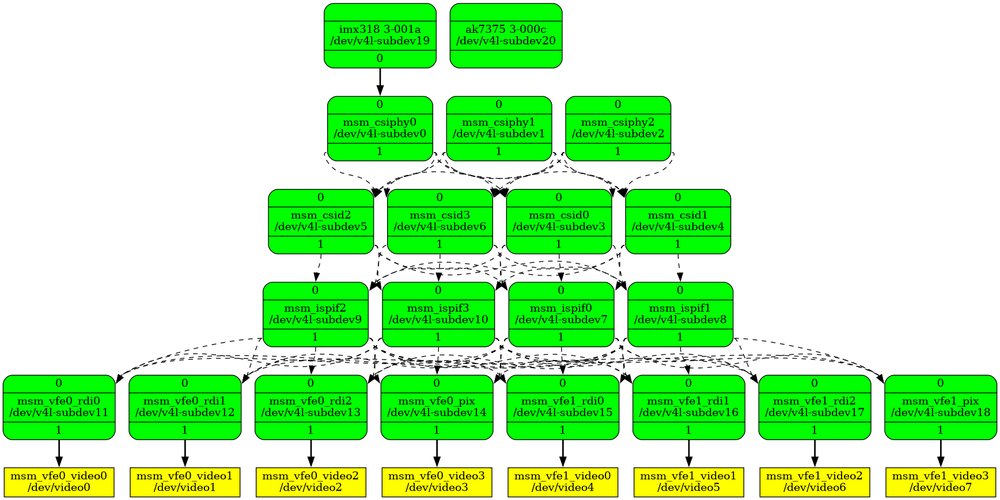

Analyzing the pipeline in this format is pretty hard when there's more than two nodes though, that's why there is a neat option in media-ctl to output the mediagraph as an actual graph using Graphviz.

$ apk add graphviz

$ media-ctl -d 0 --print-dot | dot -Tpng > pipeline.pngWhich produces this image:

In a bunch of cases you can copy most of the configuration of this graph from another device that uses the same SoC but since this is the first Qualcomm device I'm adding I have to figure out the whole pipeline.

The only part that's really specific to the Xiaomi Scorpio is the top two nodes. The imx318 is the actual camera module in the phone, connected to the SoC over MIPI. The ak7375 is listed as a "Motor driver". This means that it is the chip handling the lens movements for autofocus. There are no connections to this node since this device does not handle any graphical data; the entity only exists so you can set V4L control values on it to move the focus manually.

All the boxes in the graph are called entities and correspond with the entity blocks in the media-ctl -p output. The boxes are yellow if they are entities with the type V4L; these are the nodes that will show up as /dev/video nodes to actually get the image data out of this pipeline.

The lines between the boxes are called links, the dotted lines are disabled links and solid lines are enabled links. On this hardware a lot of the links are created by the kernel driver and are hardcoded. These links show up in the text output as IMMUTABLE and mostly describe fixed hardware paths for the image data.

The goal of configuring this pipeline is to get the image data from the IMX sensor all the way down to one of the /dev/video nodes and to figure out the purpose of the entities in between. If you are lucky there is actual documentation for this. In this case I have found documentation at https://www.kernel.org/doc/html/v4.14/media/v4l-drivers/qcom_camss.html which is for the v4.14 kernel but for some reason has been removed in later releases.

This documentation has neat explanations for these entities:

- 2 CSIPHY modules. They handle the Physical layer of the CSI2 receivers. A separate camera sensor can be connected to each of the CSIPHY module;

- 2 CSID (CSI Decoder) modules. They handle the Protocol and Application layer of the CSI2 receivers. A CSID can decode data stream from any of the CSIPHY. Each CSID also contains a TG (Test Generator) block which can generate artificial input data for test purposes;

- ISPIF (ISP Interface) module. Handles the routing of the data streams from the CSIDs to the inputs of the VFE;

- VFE (Video Front End) module. Contains a pipeline of image processing hardware blocks. The VFE has different input interfaces. The PIX (Pixel) input interface feeds the input data to the image processing pipeline. The image processing pipeline contains also a scale and crop module at the end. Three RDI (Raw Dump Interface) input interfaces bypass the image processing pipeline. The VFE also contains the AXI bus interface which writes the output data to memory.

This documentation is not for this exact SoC so the number of entities of each type is different.

Configuring the pipeline and connecting it all up is now just a lot of trial and error. In the case of the Scorpio it has already been trial-and-error'd so there is an existing config file for the old Megapixels at https://gitlab.com/postmarketOS/megapixels/-/blob/master/config/xiaomi,scorpio.ini

In this old pipeline description format the path is just enabling the links between the first csiphy, csid, ispif and vfe entity. Since this release of Megapixels did not really support further configuration it just tried to then set the resolution and pixel format for the sensors on all entities after it and hoped it worked. On an unknown platform just picking the left-most path will pretty likely bring up a valid pipeline, the duplicated entities are mostly useful for cases where you are using multiple cameras at once.

Initial config file

The first thing I did was to create a minimal config file for the Scorpio with the minimal pipeline needed to stream unmodified data from the sensor to userspace.

Version = 1;

Make: "Xiaomi";

Model: "Scorpio";

Rear: {

SensorDriver: "imx318";

BridgeDriver: "qcom-camss";

Modes: (

{

Width: 3840;

Height: 2160;

Rate: 30;

Format: "RGGB10";

Rotate: 90;

Pipeline: (

{Type: "Link", From: "imx318", FromPad: 0, To: "msm_csiphy0", ToPad: 0},

{Type: "Link", From: "msm_csiphy0", FromPad: 1, To: "msm_csid0", ToPad: 0},

{Type: "Link", From: "msm_csid0", FromPad: 1, To: "msm_ispif0", ToPad: 0},

{Type: "Link", From: "msm_ispif0", FromPad: 1, To: "msm_vfe0_rdi0", ToPad: 0},

{Type: "Mode", Entity: "imx318"},

{Type: "Mode", Entity: "msm_csiphy0"},

{Type: "Mode", Entity: "msm_csid0"},

{Type: "Mode", Entity: "msm_ispif0"},

);

},

);

};

This can be tested with the megapixels-getframe command.

$ ./megapixels-getframe

Using config: /etc/megapixels/config/xiaomi,scorpio.conf

[libmegapixels] Could not link 226 -> 1 [imx318 -> msm_csiphy0]

[libmegapixels] Capture driver changed pixfmt to UYVY

Could not select mode

This command tries to output as much debugging info as possible, but the reality is that you'll most likely need to look at the kernel source to figure out what is happening and what arbitrary constraints exist.

So the iterating and figuring out of errors starts. First, the most problematic line is the UYVY format one. This most likely means that the pipeline pixel format I selected was not correct, and to fix that the kernel helpfully selects a completely different one. getframe will detect this and show this happening. In this case the RGGB10 format is wrong and it should have been RGGB10p. The kernel implementation is a bit inconsistent about which format it actually is while MIPI only allows one of these two in the spec. Changing that removes that error.

The other interesting error is the link that could not be created. If you look closely at the Graphviz output you'll see that this link is already enabled by the kernel and in the text output it is also IMMUTABLE. This config line can be dropped because this is not configurable.
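Spotting these links by eye gets tedious on a graph this size, so here is a rough Python sketch that lists the outgoing links from saved `media-ctl -p` text together with their flags. The sample text is a trimmed copy of the output earlier in this post, and `outgoing_links` is a hypothetical helper, not part of any existing tool. Anything flagged IMMUTABLE does not need a Link line in the config.

```python
import re

SAMPLE = """\
- entity 1: msm_csiphy0 (2 pads, 5 links)
            pad0: Sink
                [fmt:UYVY8_2X8/1920x1080 field:none colorspace:srgb]
                <- "imx318 3-001a":0 [ENABLED,IMMUTABLE]
            pad1: Source
                [fmt:UYVY8_2X8/1920x1080 field:none colorspace:srgb]
                -> "msm_csid0":0 []
                -> "msm_csid1":0 []
- entity 226: imx318 3-001a (1 pad, 1 link)
              pad0: Source
                -> "msm_csiphy0":0 [ENABLED,IMMUTABLE]
"""

ENTITY = re.compile(r"- entity \d+: (.+) \(\d+ pads?, \d+ links?\)")
PAD = re.compile(r"pad(\d+): (Sink|Source)")
LINK = re.compile(r'-> "([^"]+)":(\d+) \[([^\]]*)\]')


def outgoing_links(text):
    """Return (from entity, from pad, to entity, to pad, flags) tuples."""
    links = []
    entity = pad = None
    for line in text.splitlines():
        line = line.strip()
        if m := ENTITY.match(line):
            entity = m.group(1)
        elif m := PAD.match(line):
            pad = int(m.group(1))
        elif m := LINK.match(line):
            # "[]" means no flags at all, so drop the empty string.
            flags = set(filter(None, m.group(3).split(",")))
            links.append((entity, pad, m.group(1), int(m.group(2)), flags))
    return links


links = outgoing_links(SAMPLE)
immutable = [link for link in links if "IMMUTABLE" in link[4]]
configurable = [link for link in links if "IMMUTABLE" not in link[4]]

for src, src_pad, dst, dst_pad, flags in links:
    kind = "fixed" if "IMMUTABLE" in flags else "configurable"
    print(f"{src}:{src_pad} -> {dst}:{dst_pad} ({kind})")
```

Only the `->` lines are parsed since every link shows up once as outgoing on its source pad; the `<-` lines are the same links seen from the other side.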

$ ./megapixels-getframe

Using config: /etc/megapixels/config/xiaomi,scorpio.conf

VIDIOC_STREAMON failed: Broken pipe

Progress! At least somewhat. The mode setting commands succeed but now the pipeline can not actually be started. This is because some drivers only validate options when starting the pipeline instead of when you're actually setting modes. This is one of the most annoying errors to fix because there's no feedback whatsoever on what or where the config issue is.

My suggestion for this is to first run media-ctl -p again and see the current state of the pipeline. This output shows the format for the pads of the pipeline so you can find a connection that might be invalid by comparing those. My pipeline state at this point is:

imx318: SRGGB10_1X10/3840x2160@1/30

csiphy0: SRGGB10_1X10/3840x2160

csid0: SRGGB10_1X10/3840x2160

ispif0: SRGGB10_1X10/3840x2160

vfe0_rdi0: UYVY8_2X8/1920x1080
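The comparison itself is simple enough to write down. This is a small Python sketch that walks a list of entity and format pairs in pipeline order and reports the first neighbours that disagree. The list is typed in by hand from the state above and `first_mismatch` is a hypothetical helper. It also assumes that no entity in the path is supposed to scale or convert the data, which holds for this raw path but not for every pipeline.

```python
def first_mismatch(pads):
    """Return the first adjacent pair whose format or size differs, else None."""

    def key(fmt):
        # Drop the "@1/30" frame interval that only the sensor reports.
        return fmt.split("@")[0]

    for (name_a, fmt_a), (name_b, fmt_b) in zip(pads, pads[1:]):
        if key(fmt_a) != key(fmt_b):
            return name_a, name_b
    return None


state = [
    ("imx318", "SRGGB10_1X10/3840x2160@1/30"),
    ("csiphy0", "SRGGB10_1X10/3840x2160"),
    ("csid0", "SRGGB10_1X10/3840x2160"),
    ("ispif0", "SRGGB10_1X10/3840x2160"),
    ("vfe0_rdi0", "UYVY8_2X8/1920x1080"),
]

mismatch = first_mismatch(state)
print(mismatch)
```

A pair that comes back from this is only a hint at where to look; the kernel source still has the final say on what combination a driver accepts.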

AHA! The last node is not configured correctly. It's always the last one you look at. It turns out the issue was that I'm simply missing a mode command in my config file that sets the mode on that entity, so it's left at the pipeline defaults. Let's test the pipeline with that config added:

$ ./megapixels-getframe

Using config: /etc/megapixels/config/xiaomi,scorpio.conf

received frame

received frame

received frame

received frame

received frame

The pipeline is streaming! This is the bare minimum configuration needed to make Megapixels 2.0 use this camera. For reference after all the changes above the config file is:

Version = 1;

Make: "Xiaomi";

Model: "Scorpio";

Rear: {

SensorDriver: "imx318";

BridgeDriver: "qcom-camss";

Modes: (

{

Width: 3840;

Height: 2160;

Rate: 30;

Format: "RGGB10p";

Rotate: 90;

Pipeline: (

{Type: "Link", From: "msm_csiphy0", FromPad: 1, To: "msm_csid0", ToPad: 0},

{Type: "Link", From: "msm_csid0", FromPad: 1, To: "msm_ispif0", ToPad: 0},

{Type: "Link", From: "msm_ispif0", FromPad: 1, To: "msm_vfe0_rdi0", ToPad: 0},

{Type: "Mode", Entity: "imx318"},

{Type: "Mode", Entity: "msm_csiphy0"},

{Type: "Mode", Entity: "msm_csid0"},

{Type: "Mode", Entity: "msm_ispif0"},

{Type: "Mode", Entity: "msm_vfe0_rdi0"},

);

},

);

};

Camera metadata

The config file not only stores information about the media pipeline but can also store information about the optical path. Every mode can define the focal length for example because changing the cropping on the sensor will give you digital zoom and thus a longer focal length. With modern phones with 10 cameras on the back it is also possible to define all of them as the "rear" camera and have multiple modes with multiple focal lengths so camera apps can switch the pipeline for zooming once zooming is implemented in the UI.

Finding out the values for this optical path is basically just using search engines to find datasheets and specs. Sometimes the pictures generated by Android have the correct information for this in the metadata as well.

This information is also mostly absent from sensor datasheets since those only describe the sensor itself. You either need to find this info for the camera module itself (which is the sensor plus the lens) or in the specifications for the phone.

From spec listings and review sites I've found that the focal length for the rear camera is 4.06mm and the aperture is f/2.0. This can be added to the mode section:

Width: 3840;

Height: 2160;

Rate: 30;

Format: "RGGB10p";

Rotate: 90;

FocalLength: 4.06;

FNumber: 2.0;

Reference for pipeline commands

Since this is now practically the main reference for writing config files until I get documentation generation up and running for libmegapixels I will put the complete documentation for the various commands here.

While parsing the config file there are four values stored as state: width, height, format and rate. The values for these default to the ones set in the mode and they are updated whenever you define one of these values explicitly in a command. This avoids having to write the same resolution values repeatedly on every line but it still allows having entities in the pipeline that scale the resolution.
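As a rough model of that state tracking, here is a Python sketch that fills in the values each command would end up using. It is a simplification and not the libmegapixels parser: `resolve` is a hypothetical function, the commands are plain dicts instead of config file entries, and the entities named `example_scaler` and `example_output` are made up to show a resolution change halfway through a pipeline.

```python
STATE_KEYS = ("Width", "Height", "Format", "Rate")


def resolve(mode: dict, pipeline: list[dict]) -> list[dict]:
    """Fill in the values each command would end up using."""
    # The state starts out as the values of the mode itself.
    state = {key: mode[key] for key in STATE_KEYS}
    resolved = []
    for command in pipeline:
        if command["Type"] == "Link":
            # Links carry no format, so the state is neither read nor changed.
            resolved.append(dict(command))
            continue
        # Explicit values replace the remembered ones for the rest of the pipeline.
        for key in STATE_KEYS:
            if key in command:
                state[key] = command[key]
        # Simplification: every non-Link command gets all four values,
        # including ones that command type would not actually use.
        resolved.append({**command, **state})
    return resolved


mode = {"Width": 3840, "Height": 2160, "Format": "RGGB10p", "Rate": 30}
pipeline = [
    {"Type": "Link", "From": "msm_csiphy0", "FromPad": 1, "To": "msm_csid0", "ToPad": 0},
    {"Type": "Mode", "Entity": "imx318"},
    # Hypothetical entities, only here to show the state changing.
    {"Type": "Mode", "Entity": "example_scaler", "Width": 1920, "Height": 1080},
    {"Type": "Mode", "Entity": "example_output"},
]

resolved = resolve(mode, pipeline)
for command in resolved:
    print(command)
```

The last command never mentions a resolution, yet it ends up with 1920x1080 because the command before it updated the state.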

Link

{

Type: "Link",

From: "msm_csiphy0", # Source entity name, required

FromPad: 1, # Source pad, defaults to 0

To: "msm_csid0", # Target entity name, required

ToPad: 0 # Target pad, defaults to 0

}

Translates to a MEDIA_IOC_SETUP_LINK ioctl on the media device.

Mode

{

Type: "Mode",

Entity: "imx318" # Entity name, required

Width: 1280 # Horizontal resolution, defaults to previous in pipeline

Height: 720 # Vertical resolution, defaults to previous in pipeline

Pad: 0 # Pad to set the mode on, defaults to 0

Format: "RGGB10p" # Pixelformat for the mode, defaults to previous in pipeline

}

Translates to a VIDIOC_SUBDEV_S_FMT ioctl on the entity.

Rate

{

Type: "Rate",

Entity: "imx318", # Entity name, required

Rate: 30 # FPS, defaults to previous in pipeline

}

Translates to a VIDIOC_SUBDEV_S_FRAME_INTERVAL ioctl on the entity.

Crop

{

Type: "Crop",

Entity: "imx318", # Entity name, required

Width: 1280 # Area width, defaults to previous width in pipeline

Height: 720 # Area height, defaults to previous height in pipeline

Top: 0 # The vertical offset, defaults to 0

Left: 0 # The horizontal offset, defaults to 0

Pad: 0 # Pad to set the crop on, defaults to 0

}

Translates to a VIDIOC_SUBDEV_S_CROP ioctl on the entity.

The future of libmegapixels

It has been quite a bit of work to create libmegapixels and it has been a mountain of work to rework Megapixels to integrate it. The first 90% of this is done but the trick is always in getting the second 90% finished. In the Megapixels 2.0 post I already mentioned this has burned me out. On the other hand it's a shame to let this work go to waste.

There are a few parts of autofocus, autoexposure and auto white balance that are very complicated and math heavy, and I can't figure them out. The loop between libmegapixels and Megapixels exists to pass around the values but I can't stop the system from oscillating and can't get it to settle on good values. There seems to be no good public information available on how to implement this in any case.

Another difficult part is sensor calibration. I have the hardware and software to create calibration profiles but this system expects the input pictures to come from... working cameras. The system completely lacks proper sensor linearisation which makes setting a proper whitebalance not really possible. You might have noticed the specific teal tint that gives away that a picture is taken on a Librem 5 for example. If that teal tint is corrected for manually then the midtones will look correct but highlights will become too yellow. Maybe there's a way to calibrate this properly or maybe this just takes someone messing with the curves manually for a long while to get correct.

There also needs to be an alternative to writing DNG files with libtiff, so for my own sanity it is required to write libdng. The last few minor releases of libtiff have all been messing with the TIFF tags relating to DNG files, which has caused taking pictures to not work for a lot of people. The only way around this seems to be to stop using libtiff, like all the Linux photography software has already done. This is not a terribly hard thing to implement, it just has been prioritized below getting color correct so far and I have not had the time to work on it.

There are also still segfaults and crashes relating to the GPU debayer code in Megapixels for most of the pixel formats. This is very hard to debug due to the involvement of the GPU in the equation.

How can you help

If you know how to progress with any of this I gladly accept any patches for this to push it forward.

The harder part of this section is... money. I love working on photography stuff, I can't believe the Megapixels implementation has even gotten this far but it basically takes me hyperfocusing for weeks for 12 hours per day on random camera code to get to this point, that is not really sustainable. It's great to work on this for some days and making progress, it's really painful to work for weeks on that one 30 line code block and making no progress whatsoever. At some point my dream is that I can actually live off doing open source work but so far that has still been a distant dream.

I've had the donations page now for some years and I'm incredibly happy that people are supporting me to work on this at all. It's just forever stuck on receiving enough money that you feel a responsibility to produce progress but not nearly enough to actually fund that progress. So in practice it is only extra pressure.

So I hate asking for money, but it would certainly help towards the dream of being an actual full time FOSS developer :)