I've been working on the code that has become libmegapixels for a bit more than a year now. It has taken several thrown-away codebases to arrive at a general architecture I was happy with, and it has been quite a task to split the media pipeline tasks off from the original Megapixels codebase.

After staring at this code for many months I thought I had made libmegapixels a nearly perfect little library. That's the problem with working on a codebase without anyone else looking at it.

About two weeks ago libmegapixels and the general Megapixels 2.x codebase had their first contact with external contributors, and that has put a spotlight on all the low-hanging fruit in documentation and codebase issues. A great example of that is this commit:

    diff --git a/src/parse.c b/src/parse.c
    index bfea3ec..93072d0 100644
    --- a/src/parse.c
    +++ b/src/parse.c
    @@ -403,6 +403,8 @@ libmegapixels_load_file(libmegapixels_devconfig *config, const char *file)
         config_init(&cfg);
         if (!config_read_file(&cfg, file)) {
             fprintf(stderr, "Could not read %s\n", file);
    +        fprintf(stderr, "%s:%d - %s\n",
    +            config_error_file(&cfg), config_error_line(&cfg), config_error_text(&cfg));
             config_destroy(&cfg);
             return 0;
         }

A simple patch that massively improves usability for people writing libmegapixels config files: it actually prints the parsing errors from libconfig when a file cannot be read. Because I generally run libmegapixels through the IDE and have syntax highlighting and the like set up for these files, I simply haven't triggered this code path often enough to implement this part.

These last two weeks there have also been some significantly more complicated fixes, like tracing segfaults in Megapixels 2.x, which helps a lot with getting the new codebase ready for daily use, and figuring out some API issues in libmegapixels, such as camera indexes not being set correctly in the returned data. The config files have also been updated to work with the latest versions of the PinePhone Pro kernel instead of the year-old build I've been developing against.

Video recording

I've been saying for a long time that video recording on the PinePhone won't be possible, especially not to the level of support on Android and iOS due to hardware limitations. The only real hope for proper video recording would be that someone gets H.264 hardware encoding to work on the A64 processor.

I can happily report that I was wrong. Pavel Machek has made significant progress on PinePhone video recording with a few large contributions that implement the UI bits for video recording and add a second postprocessing pipeline for running external video encoding scripts, just like Megapixels already lets you write your own custom scripts for processing the raw pictures into JPEGs.

Video recording is a complicated issue though, mainly due to the sheer amount of data that needs to be processed to make it work smoothly. At the maximum resolution of the sensor in the PinePhone the framerate isn't high enough for recording normal videos (unless you enjoy 15fps video files), but at lower resolutions the pipeline can run at normal video framerates. The maximum framerates from the sensor for this are 1080p at 30fps and 720p at 60fps.

For 720p60 the bandwidth of the raw sensor data is 442 Mbps and for 1080p30 it is 497 Mbps. This is a third of the bandwidth you would expect for full RGB frames, because the raw sensor data is essentially a greyscale image where every pixel has a different color filter in front of it. This is still too much data to write out to the eMMC or SD card for later processing, and the PinePhone already struggles to encode 720p30 video live, even without running a desktop environment.
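The numbers above can be reproduced with a little arithmetic. The sketch below is not code from libmegapixels; the function names are made up for illustration, and it assumes 8 bits per raw sample, which is the bit depth that happens to match the 442 and 497 Mbps figures.

```c
#include <assert.h>
#include <stdint.h>

/* Bits per second for an uncompressed stream with one sample per pixel,
 * which is how a raw Bayer frame is laid out.
 * Hypothetical helper for illustration, not part of libmegapixels. */
uint64_t raw_bits_per_second(uint32_t width, uint32_t height,
                             uint32_t fps, uint32_t bits_per_sample)
{
    assert(bits_per_sample > 0);
    return (uint64_t)width * height * fps * bits_per_sample;
}

/* The same stream stored as full RGB: three samples per pixel,
 * so three times the raw bandwidth. */
uint64_t rgb_bits_per_second(uint32_t width, uint32_t height,
                             uint32_t fps, uint32_t bits_per_sample)
{
    return 3 * raw_bits_per_second(width, height, fps, bits_per_sample);
}
```

Calling `raw_bits_per_second(1280, 720, 60, 8)` gives 442368000 bits per second (about 442 Mbps) and `raw_bits_per_second(1920, 1080, 30, 8)` gives 497664000 (about 497.7 Mbps). The RGB variant shows where the factor of three comes from.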

There are two implementations of video recording right now. The first saves the raw DNG frames to a tmpfs, since RAM is the only thing that can keep up with the data rate. This should give you roughly 30 seconds of video recording, and after that it will take a while to actually encode the video.
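To get a feeling for why a RAM-backed buffer only lasts for a short clip, here is a rough estimate. This is a minimal sketch under stated assumptions: the helper name is hypothetical, the 1.5 GiB budget in the usage note is an arbitrary example and not a value taken from Megapixels, and DNG container overhead is ignored.

```c
#include <assert.h>
#include <stdint.h>

/* Seconds of recording that fit in a RAM budget at a given data rate.
 * Hypothetical helper for illustration; ignores per-frame DNG overhead
 * and any memory the rest of the system needs. */
double seconds_until_full(uint64_t budget_bytes, uint64_t bits_per_second)
{
    assert(bits_per_second > 0);
    double bytes_per_second = (double)bits_per_second / 8.0;
    return (double)budget_bytes / bytes_per_second;
}
```

With an assumed budget of 1.5 GiB (1610612736 bytes) and the 720p60 rate of 442368000 bits per second, this comes out at a little over 29 seconds, which is in the same range as the roughly 30 seconds mentioned above.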

Pavel has posted an example of this video recording on his Mastodon.

The second way is putting the sensor in a YUV mode instead of outputting raw data. This gives worse picture quality on the sensor in the PinePhone, but the data format more closely matches the way frames are stored in video files, so the expensive debayer step can be skipped while recording. Together with encoding H.264 video with the ultrafast preset, this should make it just about possible to record real-time encoded video on the PinePhone.

Many thanks

It's great to see contributions to Megapixels 2 and libmegapixels. It's a big step towards getting the Megapixels 2.x codebase production ready and it's simply a lot more fun to work on a project together with other people.

It's great to have contributors working on the UI code, the camera support fixes for devices and the many bugfixes to the internals. It's also very helpful to actually have issues created by people building and testing the code on other distributions. This already ironed out a few issues in the build system.

There have also been some nice contributions to the Megapixels 1.x codebase; all of those should by now have been merged into your favorite PinePhone distribution :)

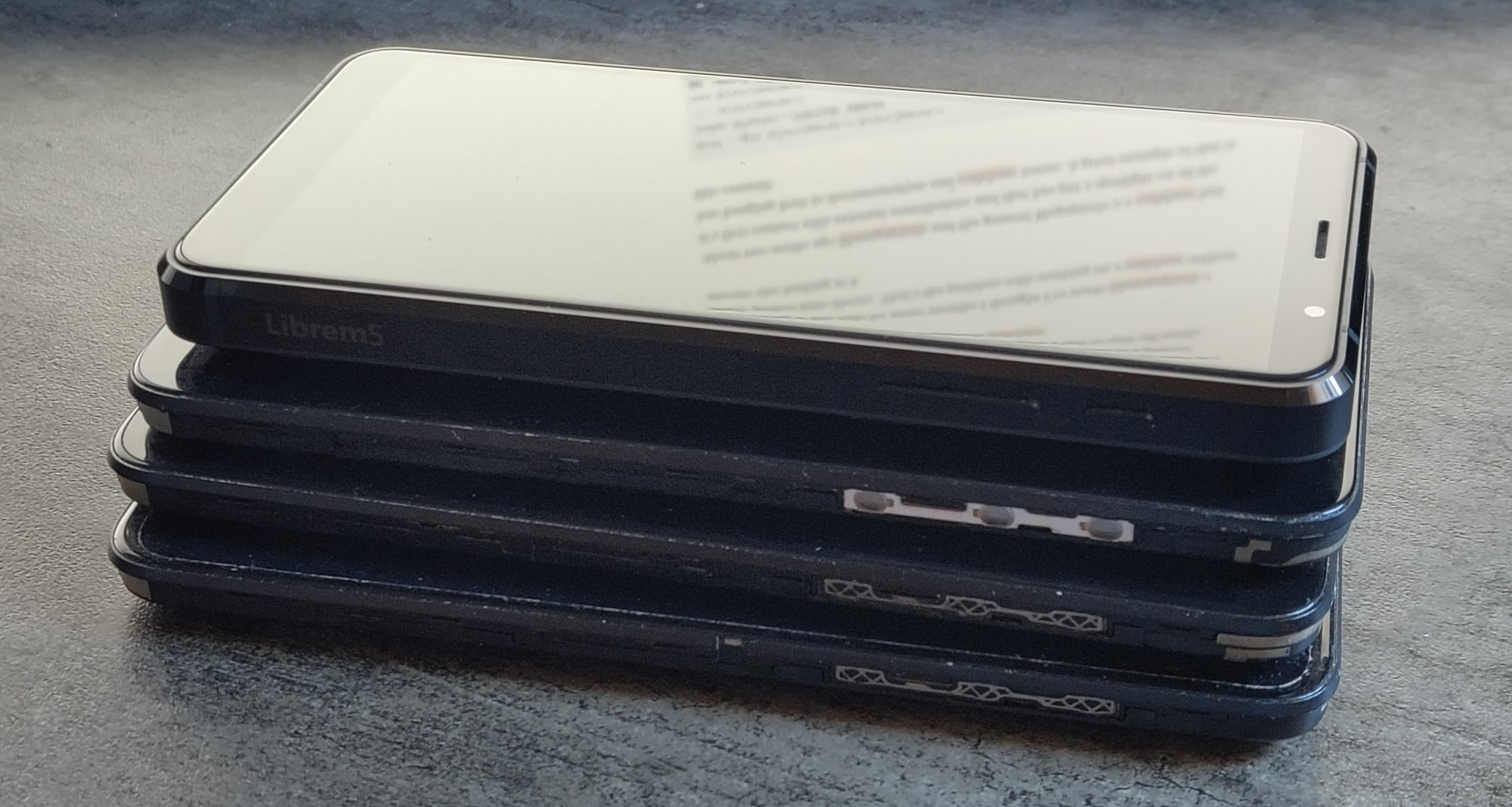

The last few Megapixels update blog posts have all been about Megapixels 2.x and the supporting libraries, so none of the improvements are immediately usable by actual PinePhone{,Pro} and Librem 5 users until there is an actual release. It will take a bunch more polish until feature parity with Megapixels 1.x is reached.