Many who read this will probably not know DNG beyond "the annoying second file Megapixels produces". DNG stands for Digital Negative, an old standard made by Adobe to store the "raw" files from cameras.

The standard has good ideas, and it is even an open standard. The Wikipedia page has a history of DNG's development that details the timeline and goals of the specification. My problem with the standard is also neatly summarized in one line of that article:

Format based on open specifications and/or standards: DNG is compatible with TIFF/EP, and various open formats and/or standards are used, including Exif metadata, XMP metadata, IPTC metadata, CIE XYZ coordinates and JPEG

This looks great at first glance: more standards! Reusing existing technologies! The issue is that it's so many standards.

TIFF

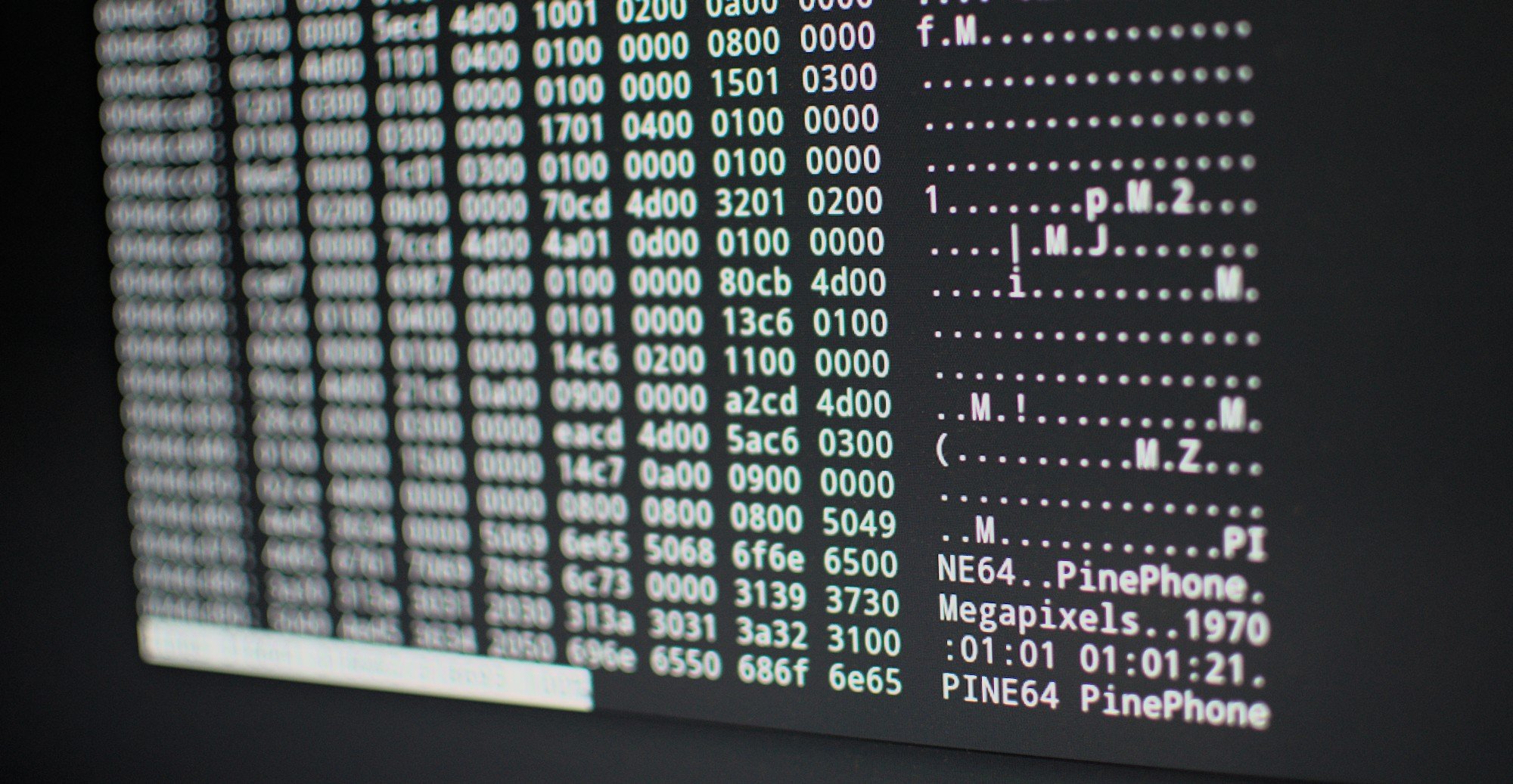

DNG is basically nothing more than a set of conventions around TIFF image files. This is possible because TIFF is an incredibly flexible format. The problem is that TIFF is an incredibly flexible format. It is flexible to the point that it's completely arbitrary where your data is. The only thing that's fixed is the header, which identifies the file as a TIFF file and holds a pointer to the first IFD (Image File Directory) chunk. The ordering of image data and IFD chunks within the file is completely arbitrary. If you want to store all the pixels for the image directly after the header and then have the metadata at the end of the file, that's completely possible. If you want to have half the metadata before the image and half after it, that's completely valid too. It all works as long as each IFD points to the right offset for the next IFD and to the right start of its image data.
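To make the "only solid part" concrete, here is a minimal Python sketch of reading that header. It assumes a classic (non-BigTIFF) file, where the header is 8 bytes: a 2-byte byte-order mark (`II` or `MM`), the magic number 42, and a 4-byte offset to the first IFD. The function name `parse_tiff_header` is made up for this example.

```python
import struct


def parse_tiff_header(data: bytes) -> tuple[str, int]:
    """Return (struct byte-order prefix, offset of the first IFD)."""
    if len(data) < 8:
        raise ValueError("a classic TIFF header is 8 bytes long")

    # "II" means little-endian (Intel), "MM" means big-endian (Motorola).
    order = {b"II": "<", b"MM": ">"}.get(data[:2])
    if order is None:
        raise ValueError("not a TIFF file: unknown byte-order mark")

    # 2-byte magic number followed by the 4-byte offset of the first IFD.
    magic, first_ifd = struct.unpack(order + "HI", data[2:8])
    if magic != 42:
        raise ValueError("not a classic TIFF file: magic number is not 42")
    return order, first_ifd
```

Everything after these 8 bytes can only be found by following offsets, which is why the rest of the file layout is free-form.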

This makes parsing a TIFF file more complicated. It's not really possible to parse TIFF from a stream unless you buffer the full file first, since it's basically a filesystem that contains metadata and images.

This format supports having any number of images inside a single file, and every image can have its own metadata attached and its own encoding. This is used to store thumbnails inside the image, for example. The format doesn't just support multiple images; it supports an actual tree of image files and blobs of metadata.

Every image in a TIFF file can have a different colorspace, color format, byte ordering, compression and bit depth. This is all without adding any of the extensions to the TIFF format.

To get information about every image in the file, there are the TIFF metadata tags. Each tag has a number as its identifier and one or more values. Every extension and further version of the TIFF specification adds more tags to describe more detailed things about the image. The DNG specification also adds a lot of new tags to store information about raw sensor data.
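As a rough illustration of how those tags sit in the file, here is a Python sketch that reads one IFD. It assumes the classic TIFF layout: a 2-byte entry count, then 12-byte entries (tag id, field type, value count, and a 4-byte field that holds either the value itself or an offset to it), then a 4-byte offset to the next IFD, which is 0 when there is none. The name `read_ifd` is my own, and the sketch leaves the 4-byte value field undecoded.

```python
import struct


def read_ifd(data: bytes, offset: int, order: str):
    """Read one IFD at `offset`; `order` is "<" or ">" from the TIFF header.

    Returns (entries, next_ifd_offset). Each entry is a tuple of
    (tag, field_type, count, raw_value_or_offset_bytes).
    """
    (num_entries,) = struct.unpack_from(order + "H", data, offset)

    entries = []
    for i in range(num_entries):
        entry_pos = offset + 2 + 12 * i
        # The last 4 bytes are kept raw: they contain the value if it fits
        # in 4 bytes, otherwise an offset to wherever the value is stored.
        tag, field_type, count, raw = struct.unpack_from(
            order + "HHI4s", data, entry_pos
        )
        entries.append((tag, field_type, count, raw))

    # The offset of the next IFD follows the last entry; 0 ends the chain.
    (next_ifd,) = struct.unpack_from(order + "I", data, offset + 2 + 12 * num_entries)
    return entries, next_ifd
```

A pointer tag such as the Exif IFD one is just an entry whose value is an offset that gets passed to the same function again, which is how the file turns into a tree.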

All these tags are not enough though. There are more standards to build upon! There's a neat tag called 0x8769, also known as "Exif IFD". This tag is a pointer to another IFD that contains Exif tags, of JPEG fame, which also describe the image. To make things complete, the information that you can describe with TIFF tags and with Exif tags overlaps, and the two can of course contradict each other in the same file.

In the same way, it is also possible to add XMP metadata to an image. This is made possible by the combination of letters developers will start to fear: TIFFTAG_XMLPACKET. Because everything is better with a bit of XML sprinkled on top.

Then lastly there's the IPTC metadata format, which I luckily have never heard of and never encountered, and I look forward to never learning about it.

Shit, I looked it up anyway. This is a standard for... what... newspaper metadata? Let's quickly close this tab.

Writing raw sensor data to a file

So what would be the bare minimum to just write sensor dumps to a file? Obviously that's just `cat sensor > picture`, but that will lack the metadata needed to actually show the picture.

The minimum data to render something that looks roughly like a picture would be:

- width and height of the image

- pixel format as fourcc

- optionally the color matrices for making the color correct

The first two are simple. The dimensions would just be 2 numbers, since it's unlikely that 3-dimensional pictures would be supported, and the pixel format can be encoded as the 4 ASCII characters representing the pixel format. The Linux kernel already has a lot of them defined in the v4l2 subsystem.
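For reference, a fourcc is just four ASCII characters packed into a 32-bit number. The Python sketch below packs the first character into the lowest byte, which is how I understand the kernel's `v4l2_fourcc` macro to work. The helper names are made up for this example, and `RG10` is only used as a sample code.

```python
def fourcc(code: str) -> int:
    """Pack a 4-character ASCII code into a 32-bit integer."""
    a, b, c, d = code.encode("ascii")  # raises if the code is not 4 bytes
    # First character ends up in the least significant byte.
    return a | (b << 8) | (c << 16) | (d << 24)


def fourcc_to_str(value: int) -> str:
    """Turn a packed fourcc back into its 4-character code."""
    return value.to_bytes(4, "little").decode("ascii")


packed = fourcc("RG10")
print(hex(packed), fourcc_to_str(packed))
```

Written to disk as a little-endian integer, this is byte-for-byte the same as writing the four characters directly, so the file stays readable in a hex editor.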

To do proper color transforms a lot more metadata would be needed which would probably mean that it's smarter to have a generic key/value storage in the format.

This format can be extremely simple to read and write, except for the extra metadata, which needs a bit of flexibility. The extra metadata should probably use an encoding that stores the number of entries and then writes every key and value as a length-prefixed string.
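A minimal Python sketch of such a key/value block could look like this. The exact layout is my assumption, not a finished design: a little-endian 32-bit entry count, then for every entry a 32-bit key length, the key bytes, a 32-bit value length, and the value bytes. The names `encode_metadata` and `decode_metadata` are made up.

```python
import struct


def encode_metadata(items: dict[str, bytes]) -> bytes:
    """Serialize entries as a count plus length-prefixed keys and values."""
    parts = [struct.pack("<I", len(items))]
    for key, value in items.items():
        raw_key = key.encode("utf-8")
        parts.append(struct.pack("<I", len(raw_key)) + raw_key)
        parts.append(struct.pack("<I", len(value)) + value)
    return b"".join(parts)


def decode_metadata(data: bytes) -> dict[str, bytes]:
    """Parse the block produced by encode_metadata."""
    (count,) = struct.unpack_from("<I", data, 0)
    pos = 4
    items = {}
    for _ in range(count):
        fields = []
        for _ in range(2):  # first the key, then the value
            (length,) = struct.unpack_from("<I", data, pos)
            pos += 4
            chunk = data[pos:pos + length]
            if len(chunk) != length:
                raise ValueError("metadata block is truncated")
            fields.append(chunk)
            pos += length
        items[fields[0].decode("utf-8")] = fields[1]
    return items
```

Because every string carries its own length, a reader that doesn't know a key can skip over it without understanding the value.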

The absolute minimum to test a sensor would be a 16-byte header for a specific resolution, which can even be written by hand, with the sensor bytes appended to it.
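One way those 16 bytes could be laid out is sketched below in Python. The layout is purely an assumption for illustration: a 4-byte magic (`RAW0`, made up here), the width and height as little-endian 32-bit integers, and the 4-character pixel format code.

```python
import struct

MAGIC = b"RAW0"  # hypothetical magic bytes, not part of any real format


def write_header(width: int, height: int, pixel_format: bytes) -> bytes:
    """Build the 16-byte header: magic, width, height, fourcc."""
    if len(pixel_format) != 4:
        raise ValueError("pixel format must be a 4-byte fourcc")
    return MAGIC + struct.pack("<II", width, height) + pixel_format


def read_header(data: bytes) -> tuple[int, int, bytes]:
    """Parse the header and return (width, height, fourcc)."""
    if len(data) < 16 or data[:4] != MAGIC:
        raise ValueError("not a file in this made-up format")
    width, height = struct.unpack_from("<II", data, 4)
    return width, height, data[12:16]


header = write_header(4208, 3120, b"RG10")
# A full file would simply be: header + raw_sensor_bytes
print(len(header), read_header(header))
```

Since the header has a fixed size, the pixel data always starts at byte 16, so the file can be parsed from a stream without buffering.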

The hard part

Making up a random image file format is easy; getting software to support it is hard. Luckily there are open source image editors and picture editors, so some support could always be patched in initially for testing. Also, this has quite a high XKCD-927 factor.

Still, it would be great to know why a file format for this could not be this simple.