So in the previous post I mentioned the next step was figuring out the job description language... Instead of that I implemented the daemon that sits between the hardware and the central controller.

The original design has a daemon that connects to the webinterface and hooks up to all the devices connected to the computer. This works fine for most things, but it also means that restarting this daemon in production requires all the connected devices to be idle, or all the running jobs to be aborted. This can be worked around by adding a way to hot-reload the configuration of the daemon and then dealing with the remaining cases that would still require a restart. I opted for the simpler option of just running one instance of the daemon for every connected device.

The daemon is also written in Python, like the other tools. It runs a networking thread, hardware monitoring thread and queue runner thread.
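As a rough illustration of that three-thread layout, here is a minimal sketch using only the standard library. It is not the daemon's real code: the function names, the job ids and the `usb-plugged` event are all made up, and the network and hardware parts are replaced by stand-ins.

```python
import queue
import threading

SHUTDOWN = object()  # sentinel that tells the queue runner to stop


def networking(jobs: queue.Queue) -> None:
    """Stand-in for the networking thread: hands incoming jobs to the runner."""
    for job_id in (101, 102):  # hypothetical job ids, normally from the network
        jobs.put(job_id)
    jobs.put(SHUTDOWN)


def hardware_monitor(events: queue.Queue, stop: threading.Event) -> None:
    """Stand-in for the hardware monitor: reports one event, then waits."""
    events.put("usb-plugged")  # hypothetical event name
    stop.wait()


def queue_runner(jobs: queue.Queue, done: list) -> None:
    """Runs jobs one at a time, in the order they arrived."""
    while True:
        job_id = jobs.get()
        if job_id is SHUTDOWN:
            break
        done.append(job_id)


def run_daemon():
    """Start the three threads, wait for them, and return what they saw."""
    jobs, events, stop, done = queue.Queue(), queue.Queue(), threading.Event(), []
    net = threading.Thread(target=networking, args=(jobs,))
    mon = threading.Thread(target=hardware_monitor, args=(events, stop))
    run = threading.Thread(target=queue_runner, args=(jobs, done))
    for thread in (net, mon, run):
        thread.start()
    net.join()
    run.join()
    stop.set()  # the monitor only exits once it is told to
    mon.join()
    seen = []
    while not events.empty():
        seen.append(events.get())
    return done, seen
```

The queues are the only thing the threads share, which keeps the locking out of the job logic.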

Message queues

In order to not have to poll the webinterface for new tasks, a message queue or message bus is required. There are a lot of options available for this, so I limited myself to two I had already used: Mosquitto and RabbitMQ. These have slightly different feature sets but basically do the same thing. The main difference is that RabbitMQ actually implements a queue system: tasks are loaded into a queue and can be picked from it by multiple clients. Clients then ack and nack tasks, and tasks get re-queued when something goes wrong. This essentially duplicates quite a few parts of the queue functionality that already exists in the central controller. Mosquitto is way simpler: it deals with messages instead of tasks. The highest level feature the protocol has is that it can guarantee a message is delivered.

I chose Mosquitto for this reason. The throughput for the queue is not nearly high enough that something like RabbitMQ is required to handle the load. The message bus feature of Mosquitto can be used to notify the daemon that a new task is available and then the daemon can fetch the full data over plain old https.
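The notify-then-fetch flow could look roughly like the sketch below. The notification payload (`{"task": 42}`) and the `/api/task/<id>` endpoint are assumptions for illustration; the post does not describe the real message format or API routes.

```python
import json
import urllib.request


def task_url(base_url: str, payload: bytes) -> str:
    """Turn a small MQTT notification into the URL of the full task data."""
    notice = json.loads(payload)  # hypothetical payload: {"task": 42}
    return f"{base_url.rstrip('/')}/api/task/{int(notice['task'])}"


def fetch_task(base_url: str, payload: bytes) -> dict:
    """Fetch the full task over HTTPS once the bus announced it."""
    with urllib.request.urlopen(task_url(base_url, payload), timeout=10) as response:
        return json.load(response)
```

The message itself stays tiny, so the bus only has to carry "something changed" and the existing HTTP API stays the single source of task data.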

The second feature I'm using the message bus for is streaming the logs. Every time a line of data is transmitted from the phone over the serial port, the daemon turns it into an MQTT message and sends it to the Mosquitto daemon running on the same machine as the webinterface. The webinterface daemon is subscribed to those messages and stores them on disk, ready to render when the job page is requested.

With the current implementation the system creates one topic per task and the daemon sends the log messages to that topic. One feature that can be used to make the webinterface more efficient is the websocket protocol support in the mqtt daemon. With this it's no longer required to reload the webinterface for new log messages or fetch chunks through ajax. When the page is open it's possible to subscribe to the topic for the task with javascript and append log messages as they stream in in real time.

Authentication

With the addition of a message bus, it's now required to authenticate to that as well, increasing the set-up complexity of the system. Since version 2 there's an interesting plugin bundled with the Mosquitto daemon: dynsec.

With this plugin, accounts, roles and ACLs can be manipulated at runtime by sending messages to a special topic. With this I can create accounts dynamically for the controllers to connect to the message bus, and hand those credentials out through the HTTP API when a controller requests them on startup.

One downside is that the only officially supported way to modify the dynsec database seems to be the mosquitto_ctrl command line utility. I don't like shelling out to executables to get things done, since it adds more dependencies outside the package manager for the main language. The protocol used by mosquitto_ctrl is quite simple though: not very well documented, but easy to figure out by reading the source.
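To give an idea of what that protocol looks like: as far as I understand the dynamic security plugin, commands are JSON objects published to the `$CONTROL/dynamic-security/v1` topic, wrapped in a `commands` list. The sketch below only builds such a payload; the role naming scheme and the choice of ACL are made up for illustration, and publishing it is left to whatever MQTT client is in use.

```python
import json

# Control topic of the Mosquitto dynamic security plugin.
DYNSEC_TOPIC = "$CONTROL/dynamic-security/v1"


def dynsec_payload(*commands: dict) -> str:
    """Wrap one or more dynsec commands in the envelope the plugin expects."""
    return json.dumps({"commands": list(commands)})


def controller_account(username: str, password: str, prefix: str) -> str:
    """Payload that creates a client with a role allowed to publish below prefix."""
    role = f"controller-{username}"  # hypothetical naming scheme
    return dynsec_payload(
        {"command": "createClient", "username": username, "password": password},
        {"command": "createRole", "rolename": role},
        {
            "command": "addRoleACL",
            "rolename": role,
            "acltype": "publishClientSend",
            "topic": f"{prefix}/#",
            "allow": True,
        },
        {"command": "addClientRole", "username": username, "rolename": role},
    )
```

Publishing `controller_account("ctrl1", "secret", "ci")` to `DYNSEC_TOPIC` with an admin account should then create the account in one round trip.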

Flask-MultiMQTT

To connect to Mosquitto from inside a Flask web application, the most common way is the Flask-MQTT extension. This has a major downside though, one that's listed directly at the top of the Flask-MQTT documentation: it doesn't work correctly in a threading Flask application, and it also fails when hot-reload is enabled in Flask because that spawns threads. This conflicts a lot with the other warning, from Flask itself, that the built-in webserver in Flask is not a production server. The production servers are the threading ones.

My original plan was to create an extension that does dynsec on top of Flask-MQTT. But looking at the amount of code that's actually in Flask-MQTT, and the downsides I would have to work around, I decided to make a new extension for Flask that does handle threading. Flask-MultiMQTT is available on PyPI now and has most of the features of the Flask-MQTT extension plus the extra features I needed. It also includes helpers for doing runtime changes to dynsec.

Some notable changes from Flask-MQTT are:

- Instead of the list of config options like `MQTT_HOST` etc. it can get the most important ones from the `MQTT_URI` option in the format `mqtts://username:password@hostname:port/prefix`.
- Support for a `prefix` setting that is prefixed to all topics in the application to have all the topics for a project namespaced below a specific prefix.
- Integrates more with the flask routing. Instead of having the `@mqtt.on_message()` decorator (and the less documented `@mqtt.on_topic(topic)` decorators) where you still have to manually subscribe, there is an `@mqtt.topic(topic)` decorator that subscribes on connection and handles wildcards exactly like flask does with `@mqtt.topic("number-topic/<int:my_number>/something")`.
- It adds a `mqtt.topic_for()` function that acts like `flask.url_for()` but for topics subscribed with the decorator. This can generate the topic with the placeholders filled in like `url_for()` does and also supports getting the topic with and without the prefix.
- Implements `mqtt.dynsec_*()` functions to manipulate the dynsec database.
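To make the URI and placeholder ideas a bit more concrete, here is a standalone sketch of how they can be done with the standard library. This is not the code of Flask-MultiMQTT: the function names `parse_mqtt_uri`, `subscription_for` and `fill_topic` are invented here, and the default ports are simply the conventional MQTT ones.

```python
import re
from urllib.parse import urlsplit

# Matches Flask-style placeholders such as <my_number> or <int:my_number>.
PLACEHOLDER = re.compile(r"<(?:\w+:)?(\w+)>")


def parse_mqtt_uri(uri: str) -> dict:
    """Split an mqtt:// or mqtts:// URI into separate connection settings."""
    parts = urlsplit(uri)
    tls = parts.scheme == "mqtts"
    return {
        "host": parts.hostname,
        "port": parts.port or (8883 if tls else 1883),
        "username": parts.username,
        "password": parts.password,
        "tls": tls,
        "prefix": parts.path.strip("/"),
    }


def subscription_for(pattern: str) -> str:
    """Replace every placeholder with the MQTT single-level wildcard."""
    return PLACEHOLDER.sub("+", pattern)


def fill_topic(pattern: str, prefix: str = "", **values) -> str:
    """Fill the placeholders in, similar in spirit to url_for()."""
    topic = PLACEHOLDER.sub(lambda m: str(values[m.group(1)]), pattern)
    return f"{prefix}/{topic}" if prefix else topic
```

With this, `number-topic/<int:my_number>/something` becomes the subscription `number-topic/+/something`, and the same pattern can be turned back into a concrete topic when publishing.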

This might seem like overkill but it was surprisingly easy to make. Even if I hadn't made a new extension, most of the time would still have been spent figuring out how to use dynsec and weird threading issues in Flask.

Log streaming

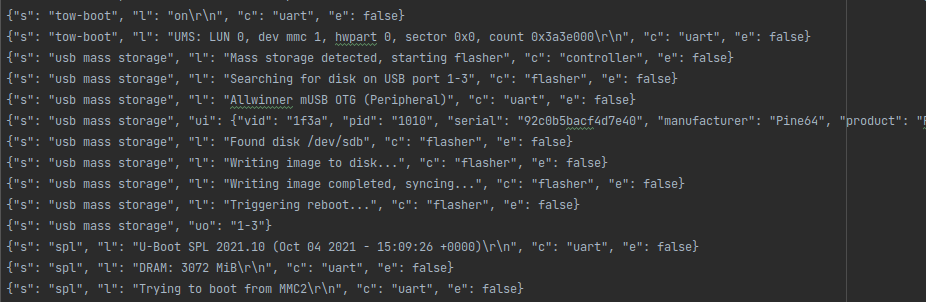

The serial port data is line-buffered and streamed to an MQTT topic for the task, but this is not as simple as just dumping the line into the payload of the MQTT message and sending it off. The controller itself also logs the state of the test system, and it already parses UART messages to figure out where in the boot process the device is, to facilitate automated flashing.

The log messages are sent as JSON objects over the message bus. The line is annotated with the source of the message, which is "uart" most of the time. There are also more complex log messages that have the full details about USB plug events.
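A sketch of what building such a message could look like is below. The field names (`source`, `line`, `time`) and the `task/<id>/log` topic layout are assumptions for illustration; only the "uart" source value comes from the description above.

```python
import json
import time


def log_topic(task_id: int) -> str:
    """Topic for the log of one task (hypothetical layout, one topic per task)."""
    return f"task/{task_id}/log"


def log_message(source: str, line: str, **details) -> str:
    """Serialize one log line, annotated with where it came from."""
    message = {
        "source": source,              # e.g. "uart"
        "line": line.rstrip("\r\n"),   # serial lines usually end in CR LF
        "time": time.time(),
    }
    message.update(details)            # extra fields, e.g. for USB plug events
    return json.dumps(message)
```

Because every message is a self-describing object, the webinterface can filter or style lines per source without parsing the text again.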

Besides UART passthrough messages there are also inline status messages from the controller itself when it's starting the job, and flasher messages when the flasher thread is writing a new image to the device. This can be extended with more annotated sources, like passing along syslog messages once the system is booted, if there is a helper installed.

This log streaming can also be extended to have a topic for messages in the other direction. That way it would be possible to get a shell on the running device.

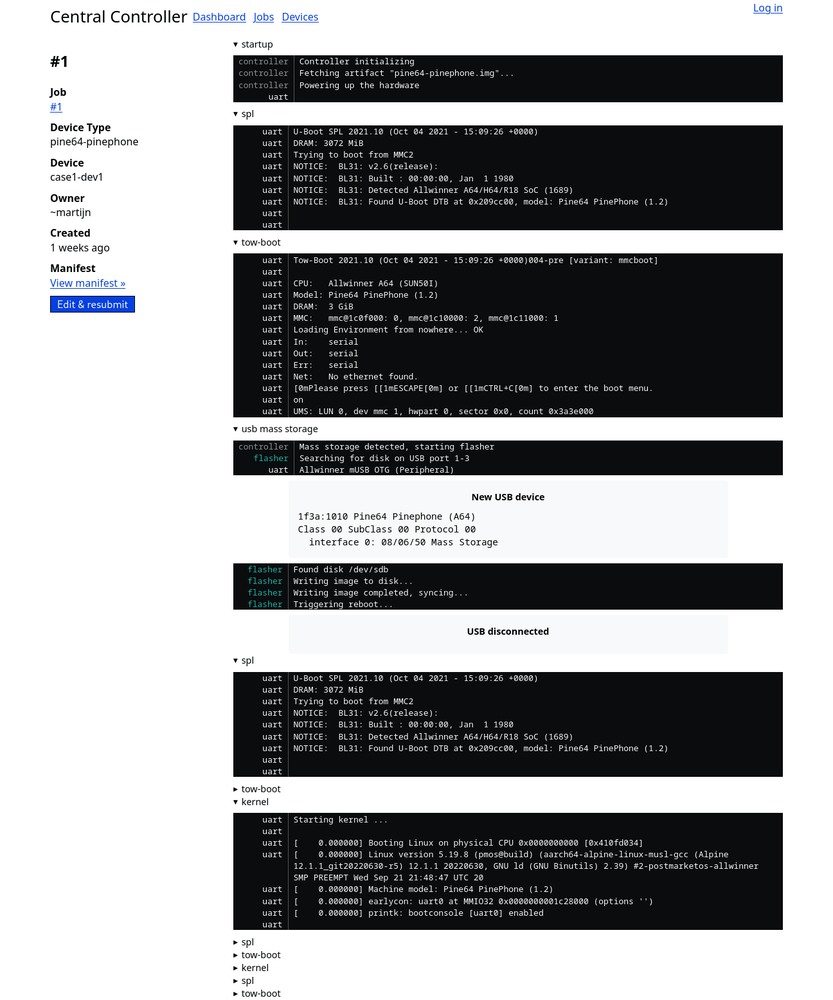

With all of this together, the system can split the UART logs into sections based on hardcoded knowledge of log messages from the kernel and the bootloader, and create nice collapsible sections.
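The sectioning can be sketched as a simple scan for known marker strings. The markers below are placeholders I picked for illustration, not the list the controller actually uses.

```python
# Hypothetical marker strings; a real parser would match whatever the
# bootloader and kernel actually print on the device under test.
SECTION_MARKERS = [
    ("spl", "U-Boot SPL"),
    ("tow-boot", "Tow-Boot"),
    ("kernel", "Booting Linux"),
]


def split_sections(lines):
    """Group log lines into (section name, lines) pairs in order of appearance."""
    sections = [("start", [])]
    for line in lines:
        for name, marker in SECTION_MARKERS:
            if marker in line and sections[-1][0] != name:
                sections.append((name, []))  # a marker opens a new section
                break
        sections[-1][1].append(line)
    # Drop the initial section when the log started with a marker right away.
    return [(name, body) for name, body in sections if body]
```

Each returned pair maps directly onto one collapsible block in the rendered log.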

Running test jobs

The current system still runs a hardcoded script at the controller when receiving a job instead of parsing a job manifest since I postponed the job description language. The demo above will flash the first attached file to the job, which in my case is pine64-pinephone.img, a small postmarketOS image. Then it will reboot the phone and just do nothing except pass through UART messages.

This implementation does not yet have a way to end jobs, or to evaluate success/failure conditions at the end of the script. A few failure conditions are already implemented though: the ones I ran into while debugging this system.

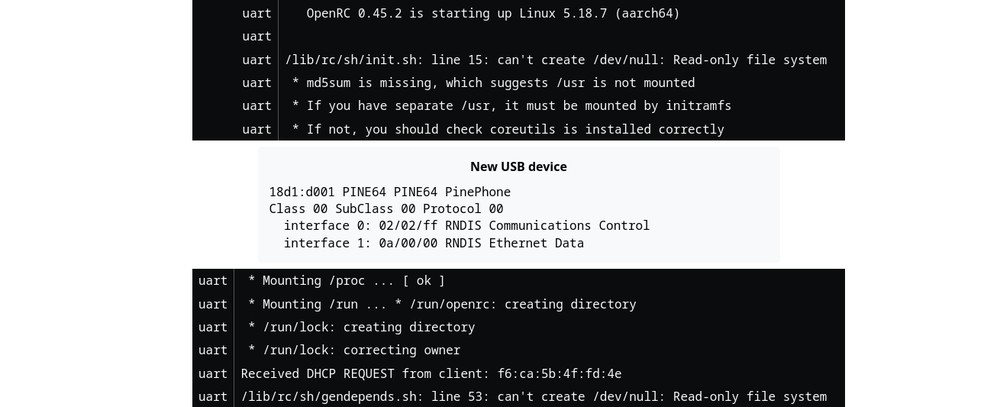

The first failure condition it can detect is a PinePhone bootlooping. Sometimes the bootloader crashes due to things like insufficient power, or the A64 SoC being in a weird state after the last test run. When the device keeps switching between the spl and tow-boot states, the system will mark it as a bootloop and fail the job. A second infinite loop, easily triggered by not inserting the battery, is the device failing directly after starting the kernel. This is what is happening in the screenshot above. This is not something that can be detected fully automatically, since a phone rebooting is a supported case.
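The spl/tow-boot bootloop check boils down to counting how often the device falls back from tow-boot to spl. A minimal sketch, assuming the controller already produces a sequence of state names and that three fallbacks is a reasonable limit (both are assumptions, not the real implementation):

```python
def is_bootloop(states, limit=3):
    """True when the device fell back from tow-boot to spl `limit` times in a row."""
    resets = 0
    previous = None
    for state in states:
        if previous == "tow-boot" and state == "spl":
            resets += 1
            if resets >= limit:
                return True
        elif state not in ("spl", "tow-boot"):
            resets = 0  # the device got past the bootloader, start counting again
        previous = state
    return False
```

A device that reaches the kernel resets the counter, so a single bootloader crash early in a long job does not fail it.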

To make this failure condition detectable, the job description needs a way to specify whether a reboot at a specific point is expected. Or, more generically, to specify which state transitions are allowed at specific points in the test run. Implementing this would remove a whole category of failures that currently require manual intervention to reset the system.
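One possible shape for such a rule is a table of allowed transitions per state, which a job can override. The state names other than spl and tow-boot, and the table itself, are hypothetical; the job description language does not exist yet.

```python
# Hypothetical default: the normal boot path of the device.
DEFAULT_TRANSITIONS = {
    "spl": {"tow-boot"},
    "tow-boot": {"kernel", "flashing"},
    "kernel": {"userspace"},
}


def first_violation(states, allowed=DEFAULT_TRANSITIONS):
    """Return the first (old, new) state pair the job does not allow, or None."""
    for old, new in zip(states, states[1:]):
        if new not in allowed.get(old, set()):
            return (old, new)
    return None
```

A job that expects a reboot after the kernel starts would add `"spl"` to the set for `"kernel"`; every other job fails as soon as that transition shows up.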

The third failure condition I encountered was the phone not entering the flashing mode correctly. If the system wants to go to flashing mode but the log starts outputting kernel logs it will mark it as a failure. In my case this failure was triggered because my solder joint on the PinePhone failed so the volume button was not held down correctly.

Another thing that needs to be figured out is how to pass test results to the controller. Normally in CI systems failure conditions are easy. You execute the script and if the exit code is not 0 it will mark the task as a failure. This works when executing over SSH but when running commands over UART that metadata is lost. Some solutions for this would be having a wrapper script that catches the return code and prints it in a predefined format to the UART so the logparser can detect it. Even better is bringing up networking on the system if possible so tests can be executed over something better than a serial port.
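The wrapper idea could work with a marker line like the one below. The `@@RESULT exit=N@@` format is invented for this sketch; any string that is unlikely to show up in normal output would do.

```python
import re

# Hypothetical marker format printed by a wrapper script on the device.
RESULT_PATTERN = re.compile(r"@@RESULT exit=(\d+)@@")


def result_line(exit_code: int) -> str:
    """What the wrapper would print to the UART after the test command ends."""
    return f"@@RESULT exit={exit_code}@@"


def parse_result(line: str):
    """Return the exit code found in a UART line, or None for ordinary output."""
    match = RESULT_PATTERN.search(line)
    return int(match.group(1)) if match else None
```

The log parser can run `parse_result()` on every UART line and mark the task as failed on the first non-zero code.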

Having networking would also fix another issue: how to get job artifacts out. If job artifacts are needed and there is only a serial line, the only option is sending some magic bytes over the serial port to tell the controller a file is coming, and then dumping the contents of the file with some metadata and encoding. Luckily, back when dial-up modems were a thing, people already figured this out with protocols like XMODEM, YMODEM and ZMODEM.
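For a feeling of how little is involved, this is a sketch of the framing used by the original checksum variant of XMODEM: a start byte, the block number and its complement, 128 bytes of data and a one-byte checksum. It only builds the packets; the ACK/NAK handshake and retransmission are left out, and a real setup would more likely use an existing implementation.

```python
SOH = 0x01        # start of a 128-byte block
PAD = 0x1A        # filler for the last, partially filled block
BLOCK_SIZE = 128


def xmodem_packets(data: bytes) -> list:
    """Split data into XMODEM (checksum variant) packets of 132 bytes each."""
    packets = []
    for index in range(0, len(data), BLOCK_SIZE):
        block = data[index:index + BLOCK_SIZE].ljust(BLOCK_SIZE, bytes([PAD]))
        number = (index // BLOCK_SIZE + 1) % 256   # block numbers start at 1
        header = bytes([SOH, number, 255 - number])
        checksum = sum(block) % 256                # simple 8-bit sum of the data
        packets.append(header + block + bytes([checksum]))
    return packets
```

The padding means the receiver cannot know the exact file length, which is one of the things the later protocols in that family fixed by sending metadata first.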

Since having a network connection cannot be relied on, the phone test system would probably need to implement both the "everything runs over a serial line" codepath and the faster and more reliable methods that use networking. For tests where networking can be brought up, some helper would be needed inside the flashed test images that brings up USB networking like the postmarketOS initramfs does, and then communicates with the controller over serial to signal its IP address.

So the next part will be figuring out the job description (again) and making the utilities to use inside the test images to help execute the jobs.